Background

The ZARM-led MaMBA project is building a Mars habitat prototype to enable human life there. We partnered with them to develop technology for these future habitat stations.

Our goal was to design and develop a technology product that supports scientists in their day-to-day tasks on an extraterrestrial mission.

This case study covers the design process behind the publication “Conversational User Interfaces to support Astronauts in Extraterrestrial Habitats”, published in December 2021 at the International Conference on Mobile and Ubiquitous Multimedia (MUM) in Leuven, Belgium.

Challenges

We talked to the scientists at MaMBA to uncover their needs and pain points when performing their day-to-day tasks on an extraterrestrial mission.

These interviews surfaced four main challenges that scientists face on such missions:

Complex Laboratory Tasks

Small-Sized Habitat

Mental Distress

Slow Internet Connection

My Responsibilities

Stakeholder/discovery interviews

Facilitating brainstorming workshop

Defining solution functionalities

Designing voice and visual user interface

Implementing the voice user interface (Python & Java)

Usability tests

Impacts

Creation of CASSIOPEIA, a compact decentralized voice assistant designed for use in a scientific habitat. It guides crew members through experiments with verbal input and visual/auditory output.

Validated design implications for multi-modal user interfaces

Jobs-to-be-Done

We summarized the information gathered during the discovery phase into these Jobs-to-be-Done (JTBD):

Use Case: Lab Experiment Assistant

Job: To efficiently complete laboratory tasks in a remote environment.

Needs: Accuracy, access to relevant information and protocols.

Pain Points: Manual tasks taking too long, difficulty accessing information, potential for human error.

Requirement: Hands-Free Interaction

Job: To control the virtual lab assistant without interrupting ongoing experiments.

Needs: Voice commands, gesture recognition, or other hands-free control methods.

Pain Points: Needing to stop tasks to interact with the assistant.

Requirement: Works Offline

Job: To access and utilize the virtual lab assistant regardless of internet connectivity.

Needs: Offline functionality, data storage and processing onboard, ability to synchronize with online resources when available.

Pain Points: Delays or interruptions in work due to unreliable internet, potential data loss.

Requirement: Compact Size

Job: To utilize the virtual lab assistant within the limited space constraints of the habitat.

Needs: Minimal footprint, portable design, efficient storage options.

Pain Points: Limited workspace cluttered by bulky equipment, difficulty accessing or using the assistant due to size constraints.

System Design

CASSIOPEIA is a compact decentralized voice assistant designed for use in a scientific habitat. It guides crew members through experiments with verbal input and visual/auditory output.

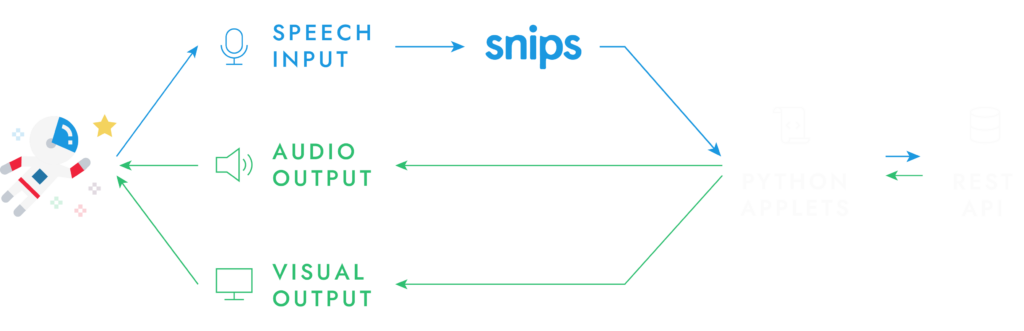

The system consists of four components: speech recognition, command execution, text-to-speech, and a graphical user interface.

Speech Recognition & Text-to-Speech: SNIPS

We used SNIPS, an AI-powered speech recognition platform, for both speech recognition and text-to-speech.

Command Execution: Python Applets

The speech recognition module triggers applets within the command execution module based on the user’s intended action. These applets facilitate communication between the database server, text-to-speech module, and graphical user interface.
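The applet mechanism described above can be sketched roughly as follows. This is a minimal illustration and not the project's actual code: the registry `APPLETS`, the decorator `applet`, the intent names, and the reply texts are all hypothetical, and the sketch assumes the speech recognition module delivers an intent name plus a dictionary of slots.

```python
from typing import Callable, Dict

# Hypothetical registry that maps an intent name to the applet handling it.
APPLETS: Dict[str, Callable[[dict], str]] = {}


def applet(intent_name: str):
    """Register the decorated function as the applet for one intent."""
    def register(func: Callable[[dict], str]) -> Callable[[dict], str]:
        APPLETS[intent_name] = func
        return func
    return register


@applet("start_experiment")  # intent name is an assumption
def start_experiment(slots: dict) -> str:
    name = slots.get("experiment", "unknown")
    return f"Starting experiment {name}."


@applet("repeat")  # intent name is an assumption
def repeat(slots: dict) -> str:
    return "Repeating the last message."


def dispatch(intent_name: str, slots: dict) -> str:
    """Run the applet for a recognized intent and return the reply text.

    In a full system the reply would be handed to both the text-to-speech
    module and the graphical user interface.
    """
    handler = APPLETS.get(intent_name)
    if handler is None:
        return "Sorry, I did not understand that."
    return handler(slots)


print(dispatch("start_experiment", {"experiment": "soil analysis"}))
```

Keeping each applet as a small function behind one dispatcher means a new voice command can be added without touching the recognition or output code.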

Graphical User Interface

The web interface acts as a guide for voice interaction, showcasing available commands and options. Unlike typical interfaces, there are no buttons or menus to click on with a mouse or touch screen.

User Flow

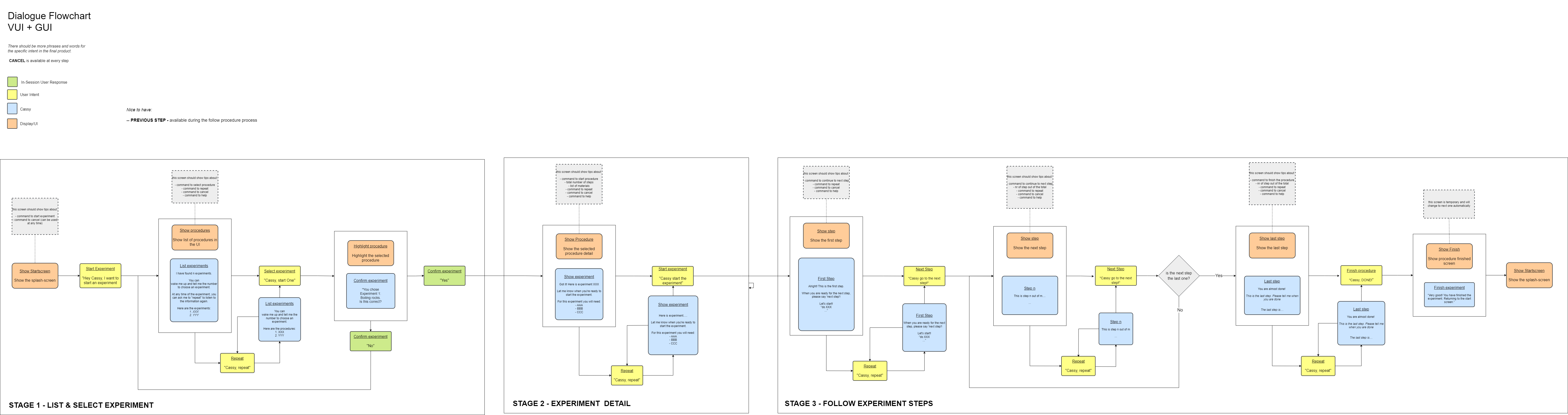

We tried different ways to guide crew members through lab procedures. For this use case, we found it worked best to have a predefined, step-by-step procedure instead of relying on spontaneous instructions.

The system could understand the sounds (phonology), sentence structure (syntax), and meaning of words (semantics) in user messages. But it struggled with what’s meant beyond the words themselves (pragmatics). For example, “I’m done” can have different meanings depending on when it’s said. To fix this, we made the system track conversation stages and use that info to understand the real meaning behind a message.
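As a rough sketch of this idea, the same utterance can be mapped to a different action depending on the tracked conversation stage. The stage names and action labels below are assumptions made for illustration; the actual stages used in CASSIOPEIA are not described here.

```python
from enum import Enum, auto


class Stage(Enum):
    """Hypothetical conversation stages; the real system may use others."""
    PREPARING = auto()        # crew member is gathering materials
    PERFORMING_STEP = auto()  # crew member is working on a middle step
    LAST_STEP = auto()        # crew member is working on the final step


# What "I'm done" is taken to mean in each stage (illustrative labels).
DONE_MEANING = {
    Stage.PREPARING: "begin_procedure",
    Stage.PERFORMING_STEP: "next_step",
    Stage.LAST_STEP: "finish_procedure",
}


def interpret_done(stage: Stage) -> str:
    """Resolve the utterance "I'm done" using the current stage."""
    return DONE_MEANING[stage]


for stage in Stage:
    print(stage.name, "->", interpret_done(stage))
```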

To develop the most user-friendly instructions for lab procedures, I collaborated with other designers in my team on role-playing exercises. These exercises helped us identify the most intuitive message content and order of information for guiding crew members effectively.

To maintain dialog consistency, we defined a set of guidelines for the voice interface:

The VUI should provide the same information as the GUI at the same time.

The users should always be aware of their current status and available actions.

The system should allow users to repeat messages, cancel actions, and quit the program.

Users should be able to navigate between steps during instructions.
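The guidelines above could be reflected in a small step navigator like the one below. It is only a sketch under stated assumptions: the class `Procedure`, the command words `next`, `back`, and `repeat`, and the sample steps are hypothetical. Cancel and quit are left out for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Procedure:
    """A predefined, non-empty list of steps with a pointer to the current one."""
    steps: List[str]
    index: int = 0

    def status(self) -> str:
        # One status string feeds both voice and screen output,
        # so the VUI and GUI present the same information at the same time.
        return f"Step {self.index + 1} of {len(self.steps)}: {self.steps[self.index]}"

    def handle(self, command: str) -> str:
        """Apply a navigation command and return the message to present."""
        if command == "next" and self.index < len(self.steps) - 1:
            self.index += 1
        elif command == "back" and self.index > 0:
            self.index -= 1
        elif command not in ("next", "back", "repeat"):
            # Unknown input: remind the user of the available actions.
            return "Available commands: next, back, repeat."
        # "repeat", or a move past either end, re-announces the current step.
        return self.status()


procedure = Procedure(["Put on gloves", "Label the sample"])
print(procedure.handle("repeat"))
print(procedure.handle("next"))
```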

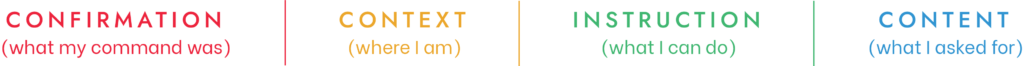

Messages should be clear and concise, and follow this order:

Why is the main content placed after the instruction?

An internal preliminary test showed that putting the main content before the instruction led to distractions and confusion. This is because the crew member’s short-term memory fills up and pushes older information out to make space for the new information. Therefore the main content is always placed last in the message.
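The ordering rule can be expressed as a small helper that always appends the main content last. The function name `compose_message` and the sample strings are hypothetical. The sketch covers only the two message parts named in the text and assumes both are plain strings.

```python
def compose_message(instruction: str, main_content: str) -> str:
    """Build one spoken message with the main content as the final part."""
    parts = [instruction.strip(), main_content.strip()]
    # Skip empty parts so a message without an instruction still reads cleanly.
    return " ".join(part for part in parts if part)


# Sample strings are made up for illustration.
print(compose_message("Say 'next' when you are ready.", "Add 5 ml of water."))
```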

Design

The GUI was created to improve the conversational interface by solving issues such as:

Users can’t recall available commands

Users can’t recall the main content

Instructions are too complex

Usability tests with the GUI showed improvements in speed and accuracy compared to the voice-only version.

The GUI features:

Large, readable components

A progress bar

Suggestions of available voice commands

(The way hints were displayed made people wait for them before speaking, even though the system could handle more. We changed the order and wording to show the most important hints first and make it clear they were just options, not rules.)

Final Evaluation

Participants

- 12 English-speaking participants experienced in lab work.

- All of them had heard of voice assistants, but only 25% had experience with such technology.

Method

- In-person, moderated, between-subjects (A/B) test

- Post-evaluation questionnaires (SUS + PSSUQ)

- Post-evaluation interview
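For readers unfamiliar with the SUS questionnaire listed above, its standard scoring can be sketched as follows: each of the ten items is answered on a 1 to 5 scale, odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` is hypothetical, and the responses below are made-up inputs, not data from this study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a System Usability Scale score from ten 1-5 responses."""
    if len(responses) != 10 or any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# Made-up responses for illustration only.
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))
```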

Findings

Multimodality Enhances Efficiency and Learnability

A graphical interface (GUI) makes the system easier to learn, especially for new users. It helps them get started quickly and reduces the need to repeat instructions.

Front-Facing Output Is Preferred

Users tended to face the device’s display or speaker during interaction, regardless of its position. This suggests a preference for visual information alongside audio cues.

Audio Quality Is Important

A good voice assistant relies on a natural-sounding TTS engine and speaker. This means the voice should have a pleasant pace, accent, and intonation, and use natural pauses and stops.

Key Takeaways

Overall, the case study showcases the successful design and optimization of CASSIOPEIA, a compact and user-friendly lab assistant tailored for extraterrestrial missions.

Multimodality (a combination of voice commands and a graphical interface) enhanced efficiency and learnability.

Clarity is key. Large text, high color contrast, and natural-sounding speech are all crucial for a good user experience.

Users need a cue that identifies the recipient device while interacting with a voice assistant.